What is needed so that robots can work along with humans?

Humans fear the unknown. Contemporary Artificial Intelligence (AI) systems have no way to directly incorporate any of the rules known to humans. Those two issues could be overcome if a way to extract, review, and update the rules governing the AI behavior existed.

The problem

AI-powered systems will need to communicate with people and follow their regulations so that they can be incorporated into society gracefully and safely. This is especially important in safety-critical domains. Therefore, a mechanism to bind perception (extraction of knowledge from examples) and reasoning (following the rules) is needed, especially since the expectations placed on AI-based systems and their range of applications are ever increasing (Lisboa 2001).

An additional aspect of this challenge is that robots, understood here as any ‘intelligent device,’ from automated factories through self-driving cars to toy pets, need to interact with the environment in real time, without (potentially fatal) response delays. That means their control systems need to be embedded in hardware as much as possible. Neural networks, due to their parallel nature, are well suited for hardware implementation and so-called edge computing. Therefore, embedding rules in networks and understanding the rules governing these systems can be a crucial step towards efficient and safe robot design.

Many industries have already realized the potential and the risks associated with Industry 4.0 and with deploying solutions for it. Still, many domains are only starting their race towards digitalization for improved efficiency, maintenance and operation, business continuity, asset management, etc.

For example, in the energy industry, several factors need to be considered. There is a general move towards green energy in many developed countries: the increased use of intermittent renewable sources and the phase-out of other energy sources. Increased attention is being given to storage and to investments in new (and smart) transmission and distribution grids. Additional changes in the commercial energy world are driven by trading, new products, and evolving population needs. All of those changes are coupled with a vastly increasing collection and flow of a variety of data. Together, these factors pose new challenges to the safety, reliability, and adaptability of energy management and monitoring systems, calling for an Energy 4.0 (Zio 2018). Automatically trained but verifiable solutions could result in improved trustworthiness and significant economic benefits in this and similar fields.

State of the Art

The field of Neural-Symbolic integration deals with the crucial task of transferring knowledge between logic-based and neural-based systems. It is only now gathering interest, because only recently have people realized that some challenges cannot be solved with pure AI.

The Neural-Symbolic knowledge transfer process is bidirectional and is usually composed of two separate phases: rules extraction and rules insertion (Hailesilassie 2016). Rules extraction aims at finding a set of rules that predicts the behavior of the ‘black-box’ algorithm as closely as possible for a given dataset. The form of the resulting rules, such as IF-THEN statements or decision trees, can then be used to classify the rules extraction methods. An alternative classification is based on “the relationship between the extracted rule and the architecture of the trained neural network” (Hailesilassie 2016). Methods that extract rules at the neuron level fall into the decompositional category. Techniques that distill the knowledge from the ‘black-box’ as a whole are denoted as pedagogical. The third category, eclectic, is a combination of the previous two (Hailesilassie 2016).
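
A minimal sketch of the pedagogical idea, assuming a one-dimensional input and a single IF-THEN threshold rule. The `black_box` function, the sample size, and the threshold search are illustrative assumptions, not a method from the cited review. The point is only that the extractor queries the model through its outputs and scores candidate rules by fidelity, that is, by how often the rule agrees with the model.

```python
import math
import random


def black_box(x: float) -> int:
    """Hypothetical opaque model: a tiny logistic unit we pretend we cannot inspect."""
    return int(1.0 / (1.0 + math.exp(-(4.0 * x - 2.0))) > 0.5)


def extract_threshold_rule(model, inputs):
    """Pedagogical extraction: use only the model's outputs and pick the rule
    'IF x > t THEN 1 ELSE 0' that agrees with the model most often."""
    labels = [model(x) for x in inputs]
    best_t, best_fidelity = None, -1.0
    for t in sorted(inputs):  # candidate thresholds are the observed inputs
        agree = sum((x > t) == bool(y) for x, y in zip(inputs, labels))
        fidelity = agree / len(inputs)
        if fidelity > best_fidelity:
            best_t, best_fidelity = t, fidelity
    return best_t, best_fidelity


rng = random.Random(0)  # fixed seed so the sketch is repeatable
samples = [rng.uniform(0.0, 1.0) for _ in range(200)]
threshold, fidelity = extract_threshold_rule(black_box, samples)
print(f"IF x > {threshold:.3f} THEN 1 ELSE 0 (fidelity {fidelity:.2f})")
```

A decompositional method would instead inspect the weights `4.0` and `-2.0` directly. The pedagogical sketch never looks at them.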

Logic rules may be inserted or embedded into a neural system in different ways (Besold et al. 2017). However, two general approaches are predominant: direct and indirect. The first one involves direct modification of the neural network at the architectural level (Tran and d’Avila Garcez 2018). It is usually limited to shallow models such as Restricted Boltzmann Machines or Multilayer Perceptrons and is fixed to a subset of architectures (Besold et al. 2017). The second one is much more general and addresses a broader range of neural architectures. It employs different forms of training with a custom-designed, dedicated loss function, where the rule-based knowledge diffuses to the neural model through data generated by the rule-based system.
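
The indirect route can be illustrated with a toy example. This is a sketch under strong assumptions: the rule (`IF a AND b THEN 1`), the single logistic neuron, and the plain cross-entropy loss are all chosen for brevity and stand in for the custom-designed losses mentioned above. What it shows is the data path: the rule-based system labels the inputs, and the neural model only ever sees those labels.

```python
import math
from itertools import product


def rule_system(a: int, b: int) -> int:
    """Hypothetical rule known to humans: IF a AND b THEN 1 ELSE 0."""
    return int(a == 1 and b == 1)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(data, epochs: int = 5000, lr: float = 1.0):
    """Fit one logistic neuron with full-batch gradient descent on cross-entropy."""
    w1 = w2 = bias = 0.0
    n = len(data)
    for _ in range(epochs):
        g1 = g2 = gb = 0.0
        for (a, b), y in data:
            err = sigmoid(w1 * a + w2 * b + bias) - y  # gradient w.r.t. pre-activation
            g1 += err * a
            g2 += err * b
            gb += err
        w1 -= lr * g1 / n
        w2 -= lr * g2 / n
        bias -= lr * gb / n
    return w1, w2, bias


# The rule-based system generates the training data; the neuron never sees the rule.
dataset = [((a, b), rule_system(a, b)) for a, b in product((0, 1), repeat=2)]
w1, w2, bias = train_neuron(dataset)


def neuron(a: int, b: int) -> int:
    return int(sigmoid(w1 * a + w2 * b + bias) > 0.5)


print([(inputs, neuron(*inputs)) for inputs, _ in dataset])
```

After training, the neuron reproduces the rule on all four inputs, even though its architecture was never modified by hand.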

At the end of the day, the common denominator of the rule-based and neural approaches is the representation and the resources needed to efficiently store and process the data. One may argue that storage and processing capabilities are growing. However, when considering robotics and real-time safety-critical systems, resource consumption and latency are critical, which brings us back to the very architecture of the system at a low level. An example of such a system is the Large Hadron Collider (LHC), where in some cases only a high-speed system response can prevent equipment destruction. The European Organization for Nuclear Research (CERN) quench protection team employs a rule-based approach, which has proved its effectiveness.

Most state-of-the-art rules extraction and injection techniques are designed for specific network architectures and particular domains, and are therefore not easily adaptable to new applications. With the emergence of Deep Learning, rules extraction and interpretability methods that can work for networks with thousands of neurons are in high demand.

The idea

My idea for solving this problem is the creation of the Generative AdveRsarial Rules ExTractor (GARRET). It is inspired by the capabilities of Generative Adversarial Networks (GANs) and Reinforcement Learning (RL). GANs allow generating structured data according to a desired distribution, which is shaped in the process of zero-sum game training. Reinforcement Learning enables systems of agents to automatically adjust their behavior to maximize their overall performance.
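
For reference, the zero-sum game mentioned above is usually written as the standard GAN minimax objective. The symbols are not from this post: $G$ is the generator, $D$ the discriminator, $p_{\text{data}}$ the target data distribution, and $p_z$ the noise prior fed to the generator.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator is rewarded for telling real samples from generated ones, and the generator is rewarded for making that task harder.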

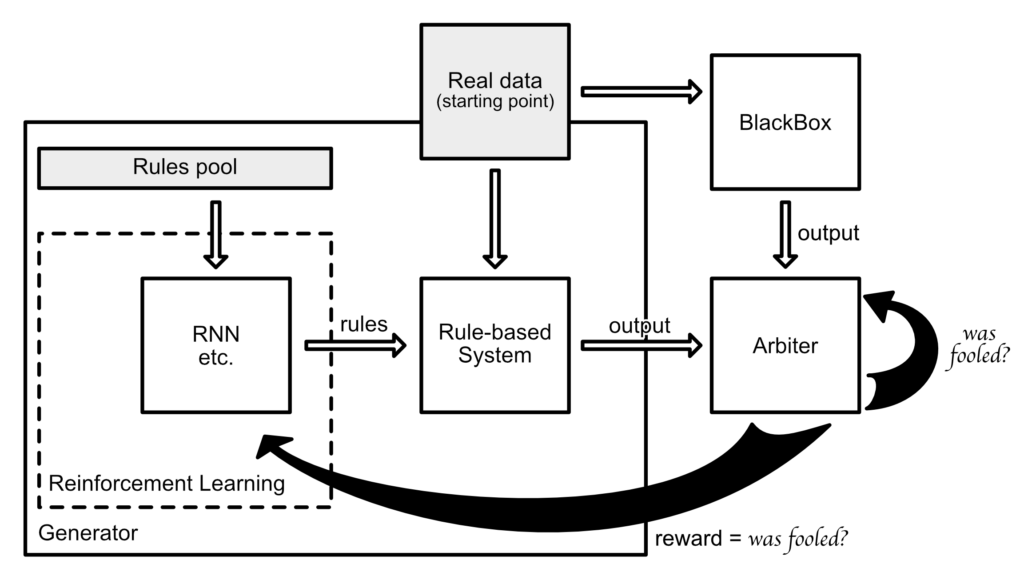

The system consists of two main modules, the Generator and the Arbiter, with the ‘black-box’ neural algorithm as a third component. When the system is used as a rules extractor, the Arbiter learns to distinguish between output coming from the ‘black-box’ algorithm and output coming from the Generator. As a result, a set of rules explaining the behavior of the ‘black-box’ is created (as such, GARRET can be classified as a pedagogical approach). The Generator itself consists of several parts:

- the outer layer is an RL algorithm that takes its success in fooling the Arbiter as its reward signal,

- the RL algorithm updates the underlying model (a type of Recurrent Neural Network) that generates (encoded) rules,

- the generated rules are then decoded and fed into the rule-based system, which is used to compute the next state(s) and/or output(s) based on the real starting point, and finally

- those outputs are used to try to fool the Arbiter.
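
The loop described in the list above can be sketched as a toy, with heavy simplifications that are assumptions of this example rather than part of the GARRET design: the Recurrent Neural Network is replaced by a softmax over five candidate rules, the Arbiter is a fixed stand-in that counts as fooled whenever the rule output matches the ‘black-box’ output (a real Arbiter would be trained), and the RL step is the exact expected policy-gradient update instead of a sampled one.

```python
import math


def black_box(x: float) -> int:
    """Hypothetical opaque model whose behavior we want to explain."""
    return int(x > 0.5)


# Encoded rules: index i decodes to "IF x > CANDIDATES[i] THEN 1 ELSE 0".
CANDIDATES = [0.1, 0.3, 0.5, 0.7, 0.9]
INPUTS = [(i + 0.5) / 100 for i in range(100)]  # the "real starting points"


def rule_system(threshold: float, x: float) -> int:
    """Rule-based system executing one decoded rule."""
    return int(x > threshold)


def arbiter_fooled_rate(threshold: float) -> float:
    """Stand-in Arbiter (assumption): fooled when rule and black box agree."""
    hits = sum(rule_system(threshold, x) == black_box(x) for x in INPUTS)
    return hits / len(INPUTS)


def softmax(logits):
    top = max(logits)
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_generator(steps: int = 200, lr: float = 1.0):
    """Policy-gradient ascent on the expected reward over candidate rules."""
    logits = [0.0] * len(CANDIDATES)
    rewards = [arbiter_fooled_rate(t) for t in CANDIDATES]
    for _ in range(steps):
        probs = softmax(logits)
        baseline = sum(p * r for p, r in zip(probs, rewards))
        # d E[reward] / d logit_i = p_i * (reward_i - E[reward])
        logits = [
            logit + lr * p * (r - baseline)
            for logit, p, r in zip(logits, probs, rewards)
        ]
    return softmax(logits)


probs = train_generator()
best = CANDIDATES[max(range(len(probs)), key=probs.__getitem__)]
print(f"most likely rule: IF x > {best} THEN 1 ELSE 0")
```

The Generator's probability mass concentrates on the rule that fools the stand-in Arbiter most often, which here is the rule that matches the ‘black-box’ exactly.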

The rules injection builds upon the rules extraction. It is based on the idea of inserting the target rules into the set generated by the RL, while simultaneously removing the undesired ones. The output of the rule-based system can then be used to fine-tune the ‘black box,’ teaching it to behave in a specified way.
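
A minimal sketch of the rule-set edit, assuming rules are stored as named `(condition, output)` pairs and evaluated in order with the first match winning. The rule names, the `inject` and `run_rules` helpers, and the default output are hypothetical and exist only for illustration.

```python
from typing import Callable, Dict, List, Tuple

Rule = Tuple[Callable[[float], bool], int]  # (condition, output)


def inject(
    extracted: Dict[str, Rule], target: Dict[str, Rule], undesired: List[str]
) -> Dict[str, Rule]:
    """Return a new rule set: target rules first (highest priority),
    followed by the extracted rules minus the undesired ones."""
    kept = {
        name: rule
        for name, rule in extracted.items()
        if name not in undesired and name not in target
    }
    return {**target, **kept}


def run_rules(rules: Dict[str, Rule], x: float, default: int = 0) -> int:
    """Rule-based system: the first rule whose condition holds decides the output."""
    for condition, output in rules.values():
        if condition(x):
            return output
    return default


extracted = {
    "high_means_on": (lambda x: x > 0.8, 1),
    "low_means_on": (lambda x: x < 0.1, 1),  # behavior we want to remove
}
target = {"very_high_means_off": (lambda x: x > 0.95, 0)}  # rule known to humans
edited = inject(extracted, target, undesired=["low_means_on"])

# Outputs of the edited rule system become fine-tuning targets for the black box.
inputs = [0.05, 0.5, 0.9, 0.99]
fine_tuning_set = [(x, run_rules(edited, x)) for x in inputs]
print(fine_tuning_set)
```

The resulting pairs could then serve as training data for the ‘black-box’, in the same way rule-generated data is used in the indirect insertion approach.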

Summary

AI-powered systems will need to communicate with people and follow external regulations so that they can be gracefully and safely integrated into society. Contemporary Artificial Intelligence (AI) systems have no way to directly incorporate any of the rules known to humans. In this short post, I described an idea for addressing the challenge of rules extraction from and injection into ‘black-box’ neural networks, in the form of an adaptable Neural-Symbolic integration suitable for applications in Industry 4.0. The proposed Generative AdveRsarial Rules ExTractor (GARRET) is inspired by the capabilities of Generative Adversarial Networks (GANs) and Reinforcement Learning (RL). Such a system may find application in, e.g., risk assessment and predictive maintenance, and can be considered a stepping stone towards introducing Neural-Symbolic integration to robotics.

References

- Besold, Tarek R., Artur d’Avila Garcez, Sebastian Bader, Howard Bowman, Pedro Domingos, Pascal Hitzler, Kai-Uwe Kuehnberger, et al. 2017. “Neural-Symbolic Learning and Reasoning: A Survey and Interpretation.” ArXiv:1711.03902. https://arxiv.org/abs/1711.03902.

- Hailesilassie, Tameru. 2016. “Rule Extraction Algorithm for Deep Neural Networks: A Review.” International Journal of Computer Science and Information Security 14 (7): 376–81. https://arxiv.org/abs/1610.05267.

- Lisboa, Paulo J.G. 2001. Industrial Use of Safety-Related Artificial Neural Networks. Vol. 327. HSE Contract Research Report. Health and Safety Executive. https://www.hse.gov.uk/research/crr_pdf/2001/crr01327.pdf.

- Tran, Son N., and Artur S. d’Avila Garcez. 2018. “Deep Logic Networks: Inserting and Extracting Knowledge From Deep Belief Networks.” IEEE Transactions on Neural Networks and Learning Systems. Institute of Electrical and Electronics Engineers (IEEE). https://doi.org/10.1109/tnnls.2016.2603784.

- Zio, E. 2018. “The Future of Risk Assessment.” Reliability Engineering & System Safety, September, 176–90. https://doi.org/10.1016/j.ress.2018.04.020.