SLAM (Simultaneous Localization and Mapping) algorithms combined with LiDAR (Light Detection and Ranging) sensors play a crucial role in applications such as autonomous vehicles, robotics, and environmental mapping. SLAM is a computational technique that enables a device to build a map of an unknown environment while simultaneously estimating its own location within that map. With LiDAR, SLAM exploits high-resolution range measurements to achieve precise localization and detailed environmental maps.

This is the third part of the Point Cloud Fundamentals topic in the Deep Learning Techniques in Point Clouds series. See Understanding the Nature of Point Cloud Data and Data Acquisition Methods for Point Clouds for the other parts of this topic!

How Undistortion Works

Undistortion is a critical preprocessing step in LiDAR data processing for SLAM, particularly in dynamic environments such as autonomous driving. A spinning LiDAR does not capture a sweep instantaneously: its points are measured one after another over the sweep period (about 100 ms for a sensor rotating at 10 Hz), so if the sensor moves during that time, each point is measured from a slightly different pose. Undistortion corrects the sweep for this motion, ensuring a more accurate representation of the surrounding environment.

Motion Prediction

The first step in undistortion is to predict the sensor’s motion during the sweep, that is, how its position and orientation change, using high-frequency IMU data or odometry information.
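As a minimal sketch of motion prediction, the snippet integrates a constant-velocity model over one sweep. It assumes planar motion (a 2D pose `x`, `y`, `yaw`) with constant forward speed `v` and yaw rate `omega` taken from the IMU or odometry; the function name `predict_pose` and all numeric values are illustrative, and a real system would integrate individual high-rate IMU samples in 3D instead.

```python
import math


def predict_pose(x, y, yaw, v, omega, dt):
    """Predict a planar pose (x, y, yaw) after dt seconds.

    Assumes constant forward speed v [m/s] and constant yaw rate omega
    [rad/s] over dt (a constant-velocity unicycle model). A real system
    would integrate individual high-rate IMU samples in 3D instead.
    """
    if abs(omega) < 1e-9:
        # Negligible rotation: move in a straight line along the heading.
        return (x + v * dt * math.cos(yaw), y + v * dt * math.sin(yaw), yaw)
    # Exact integration of the unicycle model along a circular arc.
    new_yaw = yaw + omega * dt
    r = v / omega  # turning radius
    new_x = x + r * (math.sin(new_yaw) - math.sin(yaw))
    new_y = y - r * (math.cos(new_yaw) - math.cos(yaw))
    return (new_x, new_y, new_yaw)


# Example: pose at the end of a 0.1 s sweep while driving at 10 m/s and
# turning at 0.2 rad/s (illustrative values).
print(predict_pose(0.0, 0.0, 0.0, v=10.0, omega=0.2, dt=0.1))
```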

Time Stamp Matching

Each point in a LiDAR sweep carries a timestamp indicating when it was measured. By matching these timestamps against the predicted sensor motion, the system can interpolate the sensor’s pose (position and orientation) at the exact moment each point was recorded.
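The interpolation can be sketched as follows, again assuming a planar 2D pose. The hypothetical `interpolate_pose` linearly interpolates position between the poses predicted at the start and end of the sweep and interpolates yaw along the shortest arc; this is a simplification, and 3D implementations typically interpolate rotations with quaternion slerp.

```python
import math


def interpolate_pose(t, t0, pose0, t1, pose1):
    """Interpolate a planar pose (x, y, yaw) at time t, given the poses
    predicted at t0 and t1 (e.g. the start and end of the sweep)."""
    s = (t - t0) / (t1 - t0)  # fraction of the interval elapsed
    x = pose0[0] + s * (pose1[0] - pose0[0])
    y = pose0[1] + s * (pose1[1] - pose0[1])
    # Take the shortest angular difference so that, e.g., 170 deg -> -170 deg
    # passes through 180 deg rather than sweeping back through 0 deg.
    d = pose1[2] - pose0[2]
    dyaw = math.atan2(math.sin(d), math.cos(d))
    yaw = pose0[2] + s * dyaw
    return (x, y, math.atan2(math.sin(yaw), math.cos(yaw)))  # wrap to [-pi, pi]


# Illustrative per-point timestamps within a 0.1 s sweep.
start, end = (0.0, 0.0, 0.0), (1.0, 0.0, 0.1)
for stamp in (0.0, 0.025, 0.05, 0.1):
    print(stamp, interpolate_pose(stamp, 0.0, start, 0.1, end))
```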

Point Correction

After estimating the sensor’s motion, each point’s coordinates are corrected using the predicted sensor position and orientation at that point’s timestamp. This translates and rotates the points so that the sweep represents the environment as if every point had been captured from a single reference pose (for example, the pose at the start or end of the sweep), that is, as if the sensor had been stationary.
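A minimal sketch of point correction, assuming 2D poses and that each point’s pose has already been interpolated: every point is first mapped into the world frame using the pose at its own timestamp, then mapped back into the sensor frame at a single reference pose. The helper names (`to_world`, `to_sensor`, `deskew`) are hypothetical.

```python
import math


def to_world(pose, p):
    """Map point p from the sensor frame at planar pose (x, y, yaw) to the world frame."""
    x, y, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    return (x + c * p[0] - s * p[1], y + s * p[0] + c * p[1])


def to_sensor(pose, p):
    """Map world point p into the sensor frame at planar pose (x, y, yaw)."""
    x, y, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    dx, dy = p[0] - x, p[1] - y
    return (c * dx + s * dy, -s * dx + c * dy)  # apply the inverse rotation


def deskew(points, point_poses, ref_pose):
    """Re-express every point as if it had been measured from ref_pose.

    point_poses[i] is the (interpolated) sensor pose at the moment points[i]
    was measured; ref_pose is typically the pose at the start or end of the sweep.
    """
    return [to_sensor(ref_pose, to_world(pose, p)) for p, pose in zip(points, point_poses)]


# The sensor drives 1 m forward during the sweep: the same wall point is seen at
# x = 5 m early in the sweep and at x = 4 m at the end; both map back to (5, 0).
print(deskew([(5.0, 0.0), (4.0, 0.0)], [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)], (0.0, 0.0, 0.0)))
```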

Integration into SLAM

The undistorted points are then passed on to the rest of the SLAM pipeline, which yields a more accurate map of the environment and improves the vehicle’s localization.

Real-time Performance

Efficient undistortion algorithms are essential for processing LiDAR data quickly enough for real-time applications such as autonomous driving, where timely decision-making is critical.

Robustness

Undistortion makes the SLAM system more robust to different kinds of motion, such as accelerating, decelerating, or turning, so that it functions reliably across different scenarios.

Summary

Undistortion is fundamental to SLAM systems, especially in dynamic applications like autonomous vehicles. By correcting for sensor motion during a LiDAR sweep, it ensures that the points accurately represent the environment, which underpins accurate and robust mapping and localization; implemented efficiently, it also keeps the pipeline fast enough for real-time use.

Feature Point Selection in SLAM

Once the SLAM system has corrected the distortions in the data from a LiDAR sweep, the next crucial step is identifying specific points to be used for estimating its pose – its position and orientation in space. This step is vital because processing the entire set of points collected in one sweep, which often amounts to hundreds of thousands, would require prohibitive computing power.

Identification of Feature Points

To manage this computational load, some 3D LiDAR SLAM approaches select a subset of points as feature points. Note that these are geometric features derived from the 3D structure of the point cloud, which differ from the appearance-based visual feature points used in Visual SLAM. How feature points are selected is a key differentiator among SLAM approaches.

Differentiation Among SLAM Approaches

For instance, LOAM (LiDAR Odometry and Mapping), one of the best-known 3D LiDAR SLAM methods, evaluates the local smoothness (curvature) of each point along a scan line and extracts points that lie on planar surfaces (planar points) and points that lie on sharp edges (edge points). LeGO-LOAM extends this approach by additionally identifying feature points that belong to the ground.
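To illustrate the idea behind LOAM’s selection, the sketch computes a smoothness value in the spirit of LOAM’s curvature measure for each point of one ordered scan line: points on a flat surface get values near zero, and points on a sharp corner get large values. It works in 2D for brevity, and the neighborhood size `k` and both thresholds are illustrative assumptions. LOAM itself additionally caps the number of edge and planar points per sub-region of the scan and rejects unreliable points, which this sketch omits.

```python
import math


def smoothness(scan, k=2):
    """Smoothness of each point on one ordered scan line, in the spirit of LOAM:
    c_i = ||sum_{j in S, j != i} (p_i - p_j)|| / (|S| * ||p_i||),
    where S holds the k neighbors on each side of p_i. Border points get None."""
    c = [None] * len(scan)
    for i in range(k, len(scan) - k):
        sx = sy = 0.0
        for j in range(i - k, i + k + 1):
            if j != i:
                sx += scan[i][0] - scan[j][0]
                sy += scan[i][1] - scan[j][1]
        c[i] = math.hypot(sx, sy) / (2 * k * math.hypot(*scan[i]))
    return c


def classify(scan, k=2, edge_thresh=0.1, planar_thresh=0.01):
    """Label each point 'edge' (sharp), 'planar' (flat) or None.
    The thresholds are illustrative assumptions, not LOAM's settings."""
    labels = []
    for ci in smoothness(scan, k):
        if ci is not None and ci > edge_thresh:
            labels.append("edge")
        elif ci is not None and ci < planar_thresh:
            labels.append("planar")
        else:
            labels.append(None)
    return labels


# A 2D scan line along a wall that turns a corner at (6, 5).
scan = [(float(x), 5.0) for x in range(7)] + [(6.0, float(y)) for y in range(4, -1, -1)]
print(classify(scan))
```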

Dynamic Object Handling

Moreover, some SLAM systems improve their robustness and accuracy by excluding points that originate from dynamic objects, such as cars and pedestrians. By relying only on static points that remain visible over extended periods, these systems make the mapping and localization process more reliable.
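One simple way to favor static points, sketched here as an illustration rather than the method of any specific system, is a persistence test: a point from the latest frame is kept only if its voxel was occupied in enough recent frames. It assumes the frames have already been registered into a common map frame; `voxel_key`, `persistent_points`, the voxel size, and `min_hits` are hypothetical. Other approaches instead identify dynamic points with object detection or semantic segmentation.

```python
from collections import Counter


def voxel_key(p, size):
    """Integer voxel index of a 3D point for a grid with edge length `size`."""
    return tuple(int(c // size) for c in p)


def persistent_points(frames, size=0.5, min_hits=3):
    """Keep the points of the latest frame whose voxel was occupied in at
    least `min_hits` of the given frames (all already in a common map frame).
    Voxels only briefly occupied by moving objects fail the test."""
    hits = Counter()
    for frame in frames:
        # A set, so each voxel is counted at most once per frame.
        hits.update({voxel_key(p, size) for p in frame})
    return [p for p in frames[-1] if hits[voxel_key(p, size)] >= min_hits]


# A static wall point seen in 4 frames, and a car moving 1 m per frame.
frames = [[(5.0, 0.0, 1.0), (float(i), 3.0, 0.5)] for i in range(1, 5)]
print(persistent_points(frames))
```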

Importance of Feature Point Selection

The process of feature point selection is not merely a computational necessity; it is also a strategic choice that significantly influences the performance and applicability of a SLAM system. Efficiently selected feature points can dramatically reduce the computational load while ensuring the accuracy and robustness of the pose estimation and map construction processes.

Summary

Identifying and selecting feature points within a SLAM system is crucial for managing the computational complexity inherent in processing large volumes of LiDAR data, and it is a distinguishing aspect of SLAM methodologies, as LOAM and LeGO-LOAM illustrate. By selecting the points that carry the most information for pose estimation and map construction, and by excluding points associated with dynamic objects, SLAM systems balance computational efficiency with mapping accuracy, which makes them effective in dynamic environments such as autonomous driving.

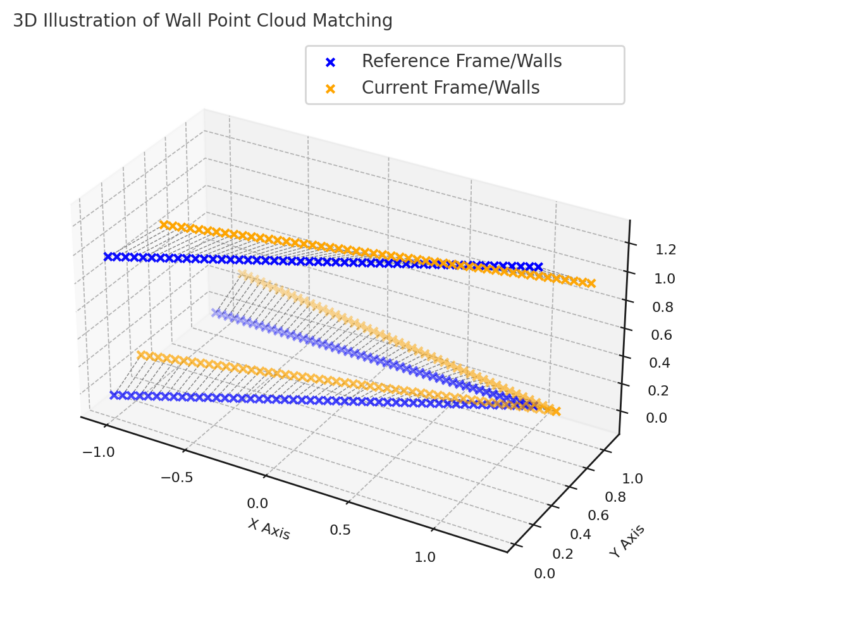

Frame Matching

Frame matching is the final step in determining the pose from the current frame. Here, a frame is the point cloud collected in one sweep. The SLAM system estimates the pose by matching the current frame against one or more reference frames, which may be the immediately preceding frame, several previous frames, or the cumulative map built up to that point. Central to this process is identifying corresponding feature points between the current and reference frames. Typically, a correspondence is established by finding, for each feature point in the current frame, the closest feature point in the reference frame.
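The closest-point search can be sketched as a brute-force loop. `find_correspondences` and its `max_dist` gate (which discards implausible pairs) are hypothetical; the sketch uses point-to-point distances, whereas LOAM matches edge points to lines and planar points to planes, and practical systems accelerate the search with a k-d tree.

```python
import math


def find_correspondences(current, reference, max_dist=1.0):
    """Pair each current-frame feature point with its closest reference point.

    Returns (i, j) index pairs; pairs farther apart than max_dist are dropped
    as implausible. Brute force, O(N * M).
    """
    pairs = []
    for i, p in enumerate(current):
        best_j, best_d = None, math.inf
        for j, q in enumerate(reference):
            d = math.dist(p, q)  # Euclidean distance, any dimension
            if d < best_d:
                best_j, best_d = j, d
        if best_j is not None and best_d <= max_dist:
            pairs.append((i, best_j))
    return pairs


print(find_correspondences([(0.0, 0.0), (5.0, 5.0)], [(0.1, 0.0), (10.0, 10.0), (1.0, 1.0)]))
```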

Estimating the Pose from Correspondences

Once correspondences have been established, the system addresses the following question: “To align all correspondences between the current frame and the reference as closely as possible, what must the current sensor pose be?” This alignment task is called scan matching, and it is solved iteratively by minimizing the distances between corresponding points.
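Written as an equation, for correspondences $(\mathbf{p}_i, \mathbf{q}_i)$, $i = 1, \dots, N$, with $\mathbf{p}_i$ in the current frame and $\mathbf{q}_i$ in the reference, a point-to-point formulation (one common choice, used here for illustration) seeks the rotation $R$ and translation $\mathbf{t}$ that minimize the summed squared distances. For fixed correspondences this has a closed-form solution via the singular value decomposition (the Kabsch/Arun method); because the correspondences change when the pose changes, matching and solving are repeated.

$$
(R^*, \mathbf{t}^*) = \arg\min_{R,\,\mathbf{t}} \sum_{i=1}^{N} \left\| R\,\mathbf{p}_i + \mathbf{t} - \mathbf{q}_i \right\|^2
$$

$$
\bar{\mathbf{p}} = \frac{1}{N}\sum_{i=1}^{N} \mathbf{p}_i, \qquad \bar{\mathbf{q}} = \frac{1}{N}\sum_{i=1}^{N} \mathbf{q}_i, \qquad H = \sum_{i=1}^{N} (\mathbf{p}_i - \bar{\mathbf{p}})(\mathbf{q}_i - \bar{\mathbf{q}})^\top = U S V^\top
$$

$$
R^* = V\,\mathrm{diag}\!\left(1, \dots, 1, \det(V U^\top)\right) U^\top, \qquad \mathbf{t}^* = \bar{\mathbf{q}} - R^* \bar{\mathbf{p}}
$$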

Scan Matching Techniques

Two primary approaches dominate scan matching techniques:

- NDT (Normal Distributions Transform): This approach divides the reference point cloud into cells and models the points in each cell with a normal distribution (a mean and a covariance). The current frame is then aligned probabilistically, by finding the pose under which its points are most likely given these distributions.

- ICP (Iterative Closest Point): ICP alternates between pairing each point in the current frame with its closest point in the reference frame and updating the pose to minimize the distances between these pairs, repeating until convergence.
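The core of NDT can be sketched in 2D: reference points are binned into grid cells, each cell with enough points is summarized by a mean and covariance, and a candidate alignment is scored by how likely the transformed current points are under their cells’ Gaussians. This shows only the scoring; the full method searches for the pose that maximizes this score (the original formulation uses Newton’s method). The cell size, the minimum of three points per cell, and the regularization `eps` are illustrative assumptions, and `cell_of`, `build_ndt`, and `ndt_score` are hypothetical names.

```python
import math
from collections import defaultdict


def cell_of(p, cell):
    """Grid index of a 2D point for square cells of side `cell`."""
    return (math.floor(p[0] / cell), math.floor(p[1] / cell))


def build_ndt(reference, cell=2.0, eps=1e-3):
    """Summarize the reference points in each cell (with at least 3 points)
    by their mean and inverse covariance. eps is added to the diagonal of
    the covariance to keep it invertible (an assumed value)."""
    bins = defaultdict(list)
    for p in reference:
        bins[cell_of(p, cell)].append(p)
    ndt = {}
    for key, pts in bins.items():
        n = len(pts)
        if n < 3:
            continue
        mx = sum(p[0] for p in pts) / n
        my = sum(p[1] for p in pts) / n
        sxx = sum((p[0] - mx) ** 2 for p in pts) / n + eps
        syy = sum((p[1] - my) ** 2 for p in pts) / n + eps
        sxy = sum((p[0] - mx) * (p[1] - my) for p in pts) / n
        det = sxx * syy - sxy * sxy
        # Inverse of [[sxx, sxy], [sxy, syy]] stored as (a, b, d) = [[a, b], [b, d]].
        ndt[key] = ((mx, my), (syy / det, -sxy / det, sxx / det))
    return ndt


def ndt_score(ndt, points, cell=2.0):
    """Sum over (already transformed) points of the Gaussian likelihood of
    the cell each point falls into; higher means better alignment."""
    score = 0.0
    for p in points:
        entry = ndt.get(cell_of(p, cell))
        if entry is None:
            continue  # point falls in an empty cell and contributes nothing
        (mx, my), (a, b, d) = entry
        dx, dy = p[0] - mx, p[1] - my
        score += math.exp(-0.5 * (a * dx * dx + 2 * b * dx * dy + d * dy * dy))
    return score


# A straight wall along y = 1: aligned points score high, shifted ones near zero.
wall = [(0.1 * i, 1.0) for i in range(40)]
ndt = build_ndt(wall)
print(ndt_score(ndt, wall), ndt_score(ndt, [(x, y + 0.5) for x, y in wall]))
```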

Both techniques iteratively adjust the sensor pose to best align the current frame with the reference; they differ in how they measure the discrepancy between the two.
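A minimal 2D point-to-point ICP can be sketched as follows: it alternates brute-force closest-point matching with the closed-form rigid alignment for paired points, composing each increment into the running pose estimate. It is a sketch only: there is no outlier rejection or k-d tree, it starts from an identity initial guess, and `best_rigid_2d` and `icp_2d` are hypothetical names.

```python
import math


def best_rigid_2d(src, dst):
    """Closed-form rotation angle theta and translation t minimizing
    sum ||R(theta) src_i + t - dst_i||^2 over paired 2D points."""
    n = len(src)
    px0 = sum(p[0] for p in src) / n
    py0 = sum(p[1] for p in src) / n
    qx0 = sum(q[0] for q in dst) / n
    qy0 = sum(q[1] for q in dst) / n
    cross = dot = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - px0, py - py0, qx - qx0, qy - qy0
        cross += px * qy - py * qx
        dot += px * qx + py * qy
    theta = math.atan2(cross, dot)  # angle maximizing sum of q_i . R p_i
    c, s = math.cos(theta), math.sin(theta)
    return theta, (qx0 - (c * px0 - s * py0), qy0 - (s * px0 + c * py0))


def icp_2d(current, reference, iterations=30, tol=1e-9):
    """Point-to-point ICP from an identity initial guess. Returns the pose
    (theta, tx, ty) that maps the current frame onto the reference."""
    theta, tx, ty = 0.0, 0.0, 0.0
    for _ in range(iterations):
        c, s = math.cos(theta), math.sin(theta)
        moved = [(c * x - s * y + tx, s * x + c * y + ty) for x, y in current]
        # 1) Correspondences: closest reference point for each moved point.
        matched = [min(reference, key=lambda q: math.dist(p, q)) for p in moved]
        # 2) Best incremental alignment for these fixed pairs.
        d_theta, (dtx, dty) = best_rigid_2d(moved, matched)
        # 3) Compose: new pose = increment applied after the current pose.
        cd, sd = math.cos(d_theta), math.sin(d_theta)
        theta += d_theta
        tx, ty = cd * tx - sd * ty + dtx, sd * tx + cd * ty + dty
        if abs(d_theta) < tol and math.hypot(dtx, dty) < tol:
            break
    return theta, tx, ty


# Two perpendicular walls, observed from a pose offset by 0.05 rad and (0.1, -0.05) m.
ref = [(0.5 * i, 0.0) for i in range(10)] + [(0.0, 0.5 * j) for j in range(1, 7)]
c, s = math.cos(0.05), math.sin(0.05)
cur = [(c * (x - 0.1) + s * (y + 0.05), -s * (x - 0.1) + c * (y + 0.05)) for x, y in ref]
print(icp_2d(cur, ref))
```

Because each iteration relies only on the current correspondences, a poor initial guess can lead ICP into a wrong local minimum, which is why a good motion prediction matters for seeding scan matching.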

Map Expansion

The next phase in the SLAM sequence is map expansion, which is generally simpler than the previous steps. Knowing the current LiDAR pose and the coordinates of all points in the current frame, the SLAM system transforms these points into the map’s coordinate frame and adds them to the existing map. This enriched map then serves as the reference for frame matching in subsequent rounds of pose estimation, continuously refining the system’s spatial understanding.
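As a sketch of map expansion, assume a 2D pose and a map stored as a dictionary keyed by voxel index: each point of the current frame is transformed into the map frame, and only the first point per voxel is kept, so re-observing the same surfaces does not make the map grow without bound. `expand_map` and the 0.2 m voxel size are hypothetical choices; real systems often use voxel-grid downsampling or keyframe-based submaps.

```python
import math


def expand_map(voxel_map, frame, pose, voxel=0.2):
    """Insert the current frame into a voxel-hashed map.

    Points are transformed by the estimated planar pose (x, y, yaw) into the
    map frame; only the first point falling into each voxel is stored, so
    re-observing the same surface does not grow the map. Returns the number
    of points actually added.
    """
    x, y, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    added = 0
    for px, py in frame:
        wx, wy = x + c * px - s * py, y + s * px + c * py
        key = (math.floor(wx / voxel), math.floor(wy / voxel))
        if key not in voxel_map:
            voxel_map[key] = (wx, wy)
            added += 1
    return added


world = {}
print(expand_map(world, [(1.1, 0.0), (1.15, 0.0)], (0.0, 0.0, 0.0)))  # both in one voxel
print(expand_map(world, [(1.1, 0.0)], (0.0, 0.0, 0.0)))  # voxel already mapped
```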

Additional Processes

The fundamental operations of 3D LiDAR SLAM have been delineated above. However, to present a more comprehensive overview, we must consider two additional crucial processes: loop closure and re-localization. These components are essential for full-fledged 3D LiDAR SLAM functionality and resemble corresponding processes in Visual SLAM, underscoring the shared methodologies between the two SLAM modalities.

Loop Closure

Loop closure involves detecting when the LiDAR revisits a previously mapped area. This detection is critical for correcting the drift that accumulates over sequential pose estimations: once a loop is recognized, the SLAM system can add a constraint between the current pose and the earlier one and adjust the trajectory and map accordingly (commonly through pose-graph optimization), keeping the environmental representation consistent.
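A minimal sketch of loop-candidate detection, assuming keyframe poses are stored as `(x, y, yaw)` tuples: earlier keyframes that lie within a radius of the current pose estimate, excluding the most recent ones, are proposed as candidates. This is a heuristic for illustration only; because the pose estimate itself has drifted, practical systems typically also use place-recognition descriptors and verify each candidate with scan matching before adding the loop constraint. `loop_candidates`, `radius`, and `min_gap` are hypothetical.

```python
import math


def loop_candidates(keyframe_poses, current_pose, radius=5.0, min_gap=50):
    """Indices of earlier keyframes within `radius` meters of the current
    pose estimate. The `min_gap` most recent keyframes are skipped so that
    ordinary consecutive frames are not reported as loops."""
    cx, cy = current_pose[0], current_pose[1]
    older = keyframe_poses[:max(len(keyframe_poses) - min_gap, 0)]
    return [i for i, p in enumerate(older) if math.hypot(p[0] - cx, p[1] - cy) <= radius]


# Drive 60 m east, shift 1 m north, drive back west (illustrative trajectory).
trajectory = [(float(i), 0.0, 0.0) for i in range(60)]
trajectory += [(float(59 - i), 1.0, math.pi) for i in range(60)]
print(loop_candidates(trajectory, (0.5, 1.0, math.pi)))
```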

Re-localization

Re-localization allows the SLAM system to determine its position within the map after losing tracking, or when re-entering a mapped area after a period of absence. It makes the system robust by enabling it to recover from tracking failures and continue its mapping and navigation tasks without interruption.
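Re-localization can be illustrated by scoring a set of candidate poses against the map and keeping the best one if it explains enough of the current scan. In this 2D sketch the candidates are simply given (in practice they might come from a coarse search over the map or from place recognition), the overlap test is brute force, and the winning pose would normally be refined by scan matching; `overlap_score`, `relocalize`, `radius`, and `min_score` are hypothetical.

```python
import math


def overlap_score(scan, map_points, pose, radius=0.3):
    """Fraction of scan points that land within `radius` of some map point
    when the scan is placed at the planar pose (x, y, yaw)."""
    x, y, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    hits = 0
    for px, py in scan:
        wx, wy = x + c * px - s * py, y + s * px + c * py
        if any(math.hypot(wx - mx, wy - my) <= radius for mx, my in map_points):
            hits += 1
    return hits / len(scan)


def relocalize(scan, map_points, candidates, min_score=0.8):
    """Return the best-scoring candidate pose, or None if none explains at
    least `min_score` of the scan (tracking then remains lost)."""
    best = max(candidates, key=lambda pose: overlap_score(scan, map_points, pose))
    return best if overlap_score(scan, map_points, best) >= min_score else None


# Map: two perpendicular walls. The scan is taken from the (unknown) pose (2, 1, 0).
walls = [(0.5 * i, 0.0) for i in range(10)] + [(0.0, 0.5 * j) for j in range(1, 7)]
scan = [(mx - 2.0, my - 1.0) for mx, my in walls]
candidates = [(0.0, 0.0, 0.0), (2.0, 1.0, 0.0), (2.0, 1.0, math.pi / 2), (5.0, 5.0, 0.0)]
print(relocalize(scan, walls, candidates))
```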

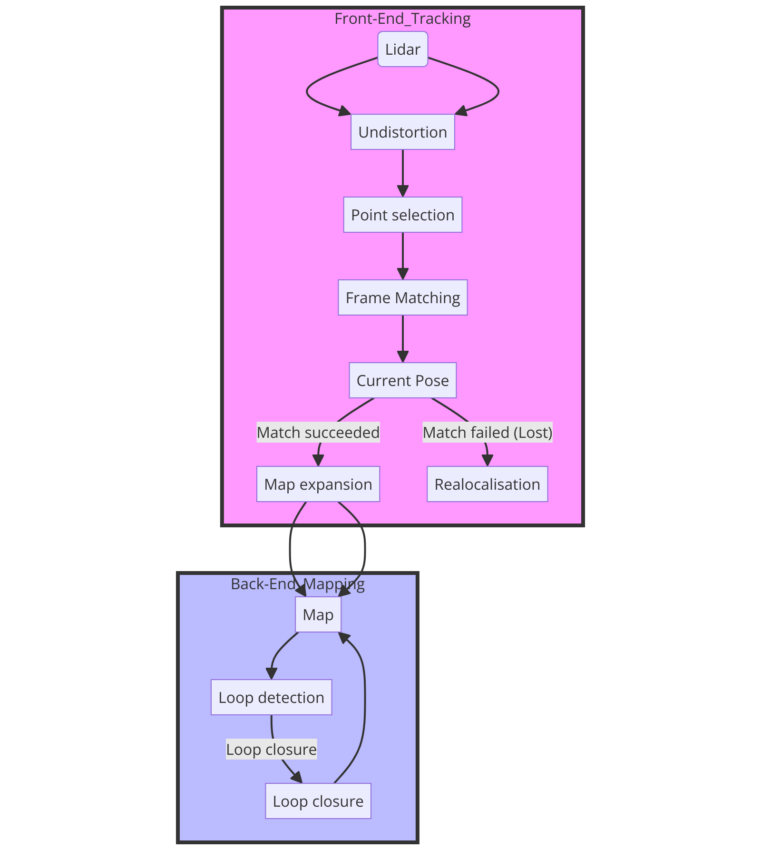

Overall schematic of the process

The figure above presents a structured overview of a 3D LiDAR SLAM system. It is divided into two main components: Front-End Tracking and Back-End Mapping.

The Front-End Tracking starts with a LiDAR sensor that captures the environmental data, which is then processed through several stages including undistortion and point selection, leading to frame matching. Depending on whether a match is found, the system either advances to map expansion or enters a relocalization phase.

Back-End Mapping, in turn, expands the map with new data, detects loops to recognize when the device returns to a previously mapped area, and performs loop closure to correct the map. This process continually updates and refines the map, keeping localization and mapping accurate.
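The control flow of this schematic can be sketched as one function per sweep. Every step is passed in as a callable, so nothing here is a specific algorithm: the names (`process_sweep`, `undistort`, `select_features`, and so on) and the convention that `match` and `detect_loop` return `None` on failure are assumptions made for illustration.

```python
def process_sweep(sweep, state, undistort, select_features, match,
                  expand_map, relocalize, detect_loop, close_loop):
    """Run one sweep through the front-end / back-end flow.

    Every step is an injected callable; `match` is assumed to return None
    when no match is found, and `detect_loop` to return None when no loop
    is detected. Returns a label describing which branch was taken.
    """
    # Front-end tracking.
    points = undistort(sweep, state)
    features = select_features(points)
    pose = match(features, state)
    if pose is None:
        # No match: try to recover the pose against the existing map.
        state["pose"] = relocalize(features, state)
        return "relocalized"
    state["pose"] = pose
    # Back-end mapping.
    expand_map(points, pose, state)
    loop = detect_loop(pose, state)
    if loop is not None:
        close_loop(loop, state)  # correct the drift accumulated along the loop
        return "loop_closed"
    return "tracked"


# Trivial stand-in steps, just to exercise the control flow.
state = {"map": []}
steps = dict(
    undistort=lambda sweep, st: list(sweep),
    select_features=lambda pts: pts[::2],
    match=lambda feats, st: (0.0, 0.0, 0.0),
    expand_map=lambda pts, pose, st: st["map"].extend(pts),
    relocalize=lambda feats, st: None,
    detect_loop=lambda pose, st: None,
    close_loop=lambda loop, st: None,
)
print(process_sweep([(1.0, 2.0), (3.0, 4.0)], state, **steps), state["map"])
```

Injecting the steps keeps the skeleton testable and makes it easy to swap, for example, ICP for NDT inside `match`.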

Conclusion

Throughout this discussion, we have traversed the landscape of 3D LiDAR SLAM, uncovering the intricacies of each step within this complex process. Starting with undistortion, which ensures a sharp representation of the surroundings by accounting for sensor movement, we moved on to feature point selection, highlighting the SLAM system’s strategic choice to minimize computational demand while maximizing accuracy. We then delved into frame matching, the crux of pose estimation, demonstrating how the SLAM system aligns the current frame with a reference frame to deduce the sensor’s pose.

Map expansion proved to be a more straightforward, albeit essential, process in which the SLAM system continuously enhances the map with newly acquired data points. This growing map becomes pivotal for future frame matching and pose estimation. Finally, we broadened our understanding with loop closure and re-localization, which are vital for maintaining the SLAM system’s accuracy and robustness over time. Together, these processes form a SLAM system that is both sophisticated and capable.